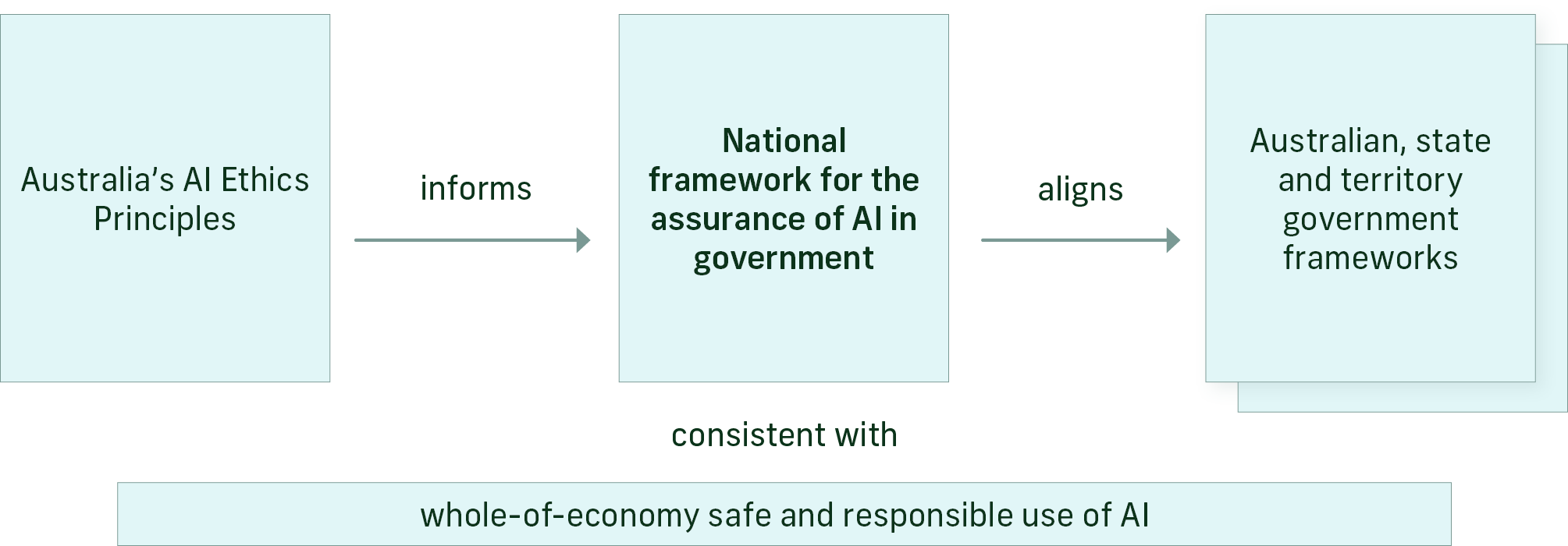

The national framework for the assurance of AI in government provides a nationally consistent approach to the assurance of artificial intelligence (AI) use in government.

Based on Australia’s AI Ethics Principles (DISR 2019), and consistent with broader work on safe and responsible AI, the framework establishes cornerstones and practices of AI assurance. Rather than focusing on technical detail, the framework sets foundations across all aspects of government, leaving jurisdictions to develop specific policies and guidance that reflect their own legislative, policy and operational contexts.

Assurance is an essential part of the broader governance of how governments use AI, including its development, procurement and deployment. This enables governments to:

- understand the expected benefits of AI

- identify risks and apply mitigations

- ensure lawful use

- understand if AI is operating as expected

- demonstrate, through evidence, that the use of AI is safe and responsible.

As the Australian, state and territory governments continue to use AI, they will likely develop new or improved assurance practices based on their unique successes, vulnerabilities and impacts.

These learnings will be shared and incorporated into future iterations of this framework.

Complementary initiatives

The national framework for the assurance of AI in government complements local and global initiatives on the safe and responsible use of AI, both by governments and in wider economies. The Australian, state and territory governments will consider these and other initiatives as they develop their unique assurance approaches.

Australia’s AI Ethics Framework

First published in 2019 and developed by the CSIRO’s Data61 and the Department of Industry, Science and Resources (DISR), Australia’s AI Ethics Framework (DISR 2019) defines the ethics principles that inform the practices found in this national assurance framework.

Explore the ethics framework on the DISR website.

Safe and responsible AI in Australia

Following consultation initiated by DISR in 2023, the Australian Government committed to ensuring the use of AI systems in high-risk settings is safe and reliable, while use in low-risk settings can continue largely unimpeded.

As set out in the government’s interim response to the consultation, this work will ensure AI is used safely and responsibly across the wider economy. A crucial element of this agenda is the role of government as an exemplar in the safe and responsible use of AI.

Read the Australian Government’s interim response on the DISR website.

NSW Artificial Intelligence Assurance Framework

With the publication of its framework in 2022, the NSW Government became the world’s first to mandate an assurance framework for the use of AI systems.

The NSW Artificial Intelligence Assurance Framework (Digital NSW 2022) helps project teams using AI to comprehensively analyse and document their projects’ AI-specific risks. It also helps teams implement risk mitigation strategies and establish clear governance and accountability measures.

Access the NSW Artificial Intelligence Assurance Framework on the digital.nsw website.

OECD principles for responsible stewardship of trustworthy AI

The Australian Government committed to these principles when they were first published by the Organisation for Economic Cooperation and Development (OECD) in 2019. The principles aim to foster innovation and trust in AI by promoting the responsible stewardship of trustworthy AI while ensuring respect for human rights and democratic values.

Read the recommendation containing the principles on the OECD website.

Bletchley Declaration on AI safety

Australia, alongside 27 other countries and the European Union, agreed to this declaration at the UK Government’s 2023 AI Safety Summit. The declaration affirms that AI should be designed, developed, deployed and used in a manner that is safe, human-centric, trustworthy and responsible.

Read the text of the Bletchley Declaration as hosted on the DISR website.

Seoul Declaration for safe, innovative and inclusive AI

On 21 May 2024, the Australian Government agreed to 3 outcomes at the AI Seoul Summit in South Korea:

- Declaration for safe, innovative and inclusive AI

- Statement of Intent toward International Cooperation on AI Safety Science

- Ministerial Statement for advancing AI safety, innovation and inclusivity.

The agreements build on the Bletchley Declaration, confirming a shared understanding of opportunities and risks, and committing nations to deeper international cooperation and dialogue.

Read the text of the Seoul Declaration as hosted on the DISR website.

What is an AI system?

In November 2023, OECD member countries approved this revised definition of an AI system:

‘A machine-based system that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations or decisions that can influence physical or virtual environments. Different AI systems vary in their levels of autonomy and adaptiveness after deployment.’

To avoid definitional complexities, the Australian, state and territory governments should consider practical guidance that helps staff identify when AI assurance processes apply, such as when:

- the team identifies that the project, product or service uses AI

- a vendor describes its product or service as using AI

- users, the public or other stakeholders believe the project, product or service uses AI.
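
The triggers above could be captured in a simple screening check. The following is a minimal sketch only, not part of the framework: the class `AiIndicators`, the function `assurance_applies` and the field names are hypothetical, and it assumes that meeting any one of the listed triggers is enough for assurance processes to apply.

```python
from dataclasses import dataclass


@dataclass
class AiIndicators:
    """Hypothetical screening answers for a project, product or service."""

    team_identifies_ai: bool = False       # the team identifies that it uses AI
    vendor_describes_ai: bool = False      # a vendor describes it as using AI
    stakeholders_believe_ai: bool = False  # users, the public or others believe it uses AI


def assurance_applies(indicators: AiIndicators) -> bool:
    """Return True if any trigger is met.

    Assumption: the triggers are alternatives, so one is sufficient.
    """
    return (
        indicators.team_identifies_ai
        or indicators.vendor_describes_ai
        or indicators.stakeholders_believe_ai
    )


# Example: a vendor markets its product as using AI, so the check is triggered
# even though the team has not itself identified any AI use.
vendor_case = AiIndicators(vendor_describes_ai=True)
needs_assurance = assurance_applies(vendor_case)
```

A check like this avoids debating whether a system meets a formal definition of AI. A jurisdiction adopting this approach would substitute its own triggers and guidance.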